GPU/Neocloud Billing using Rafay’s Usage Metering APIs

Cloud providers offering GPU or neocloud services need accurate, automated mechanisms to track resource consumption.
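To illustrate what such a mechanism ultimately feeds into, here is a rough sketch that turns metered GPU usage into per-tenant charges. It does not call Rafay's APIs. The `UsageRecord` fields, the `RATES` rate card, and the `bill` helper are all hypothetical. The sketch assumes that usage arrives as per-tenant GPU allocations with start and end times, and that billing is per GPU-hour.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical shape of one metered usage record (not Rafay's actual schema).
@dataclass
class UsageRecord:
    tenant: str
    gpu_type: str
    gpu_count: int
    start: datetime
    end: datetime

# Hypothetical price per GPU-hour, keyed by GPU type.
RATES = {"H100": Decimal("2.50"), "A100": Decimal("1.20")}

def gpu_hours(rec: UsageRecord) -> Decimal:
    """GPU-hours for one record: GPU count x elapsed hours (whole seconds only)."""
    delta = rec.end - rec.start
    seconds = delta.days * 86400 + delta.seconds  # sub-second remainder ignored
    return Decimal(rec.gpu_count) * Decimal(seconds) / Decimal(3600)

def bill(records, rates=RATES):
    """Sum charges per tenant and round each total to cents."""
    totals = defaultdict(Decimal)
    for rec in records:
        if rec.end <= rec.start:
            raise ValueError(f"invalid usage interval for tenant {rec.tenant}")
        totals[rec.tenant] += gpu_hours(rec) * rates[rec.gpu_type]
    return {
        tenant: amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
        for tenant, amount in totals.items()
    }

if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 0, 0)
    records = [
        UsageRecord("team-a", "H100", 8, t0, datetime(2024, 1, 1, 2, 0)),   # 16 GPU-hours
        UsageRecord("team-a", "A100", 4, t0, datetime(2024, 1, 1, 0, 30)),  # 2 GPU-hours
        UsageRecord("team-b", "A100", 1, t0, datetime(2024, 1, 1, 10, 0)),  # 10 GPU-hours
    ]
    print(bill(records))
    # {'team-a': Decimal('42.40'), 'team-b': Decimal('12.00')}
```

The sketch uses `Decimal` rather than `float` so that summed charges do not pick up binary rounding error before the final rounding to cents.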

Enterprises and cloud service providers constantly look for ways to bring AI use cases to market faster. Some want to accelerate application delivery internally, while others want to do the same for their customers as a competitive advantage.

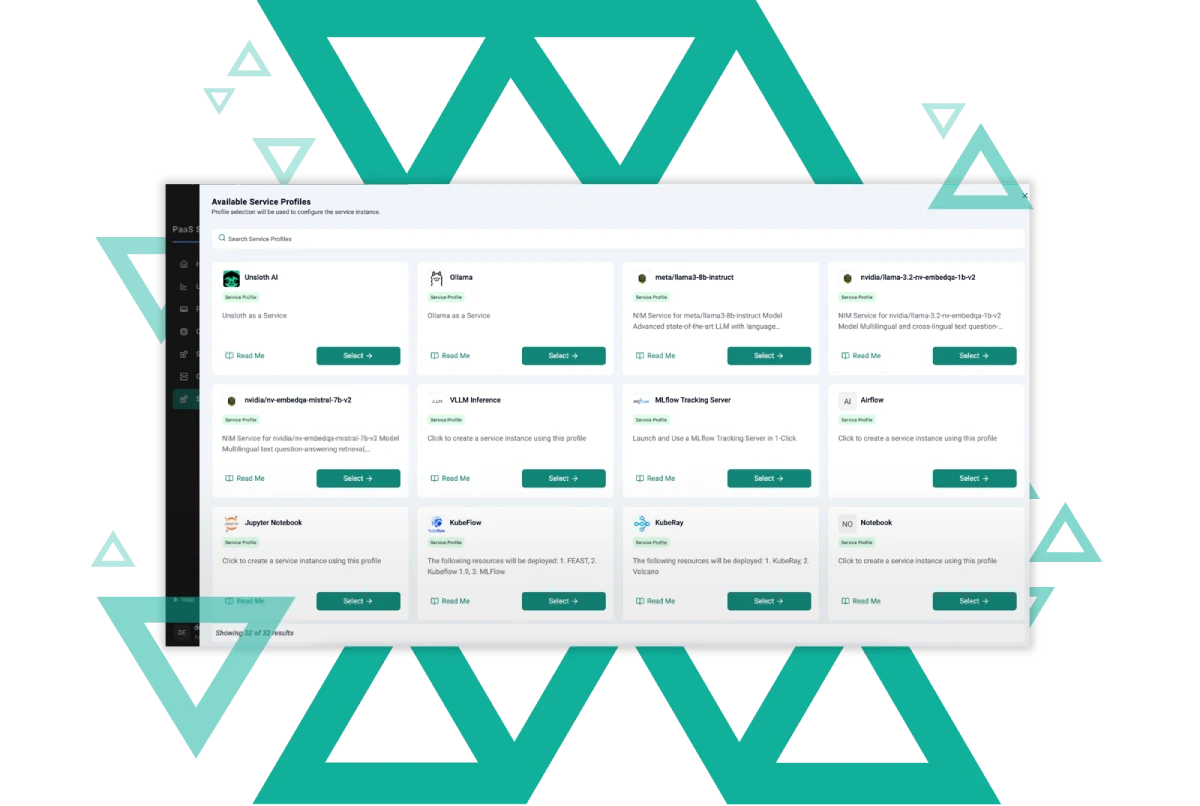

With built-in capabilities for Generative AI, compute consumption, and infrastructure management, the Rafay Platform offers customers "as a Service" experiences at every layer of the stack. These include ready-made templates for GenAI use cases that speed up the enterprise AI journey.

Launch GPU-as-a-Service, Serverless Inferencing, and AI Marketplaces in days, not months, with the Rafay Platform. Deliver self-service environments (EaaS) for developers, ML teams, and platform users while supporting AI/ML training, model deployment, and GenAI inference across multiple environments.

Developers and data scientists can deploy, view, and manage their GenAI applications and infrastructure in isolation using self-service workflows.

Teams can create environment and Kubernetes blueprints that bring standardization and consistency across EKS, AKS, and GKE clusters as well as private data center and edge locations.

To save costs, enterprises commonly have different teams share clusters, sometimes including specific LLM resources. The Rafay Platform's multi-modal, multi-tenancy capabilities can support many AI/ML teams on the same Kubernetes cluster.

Benefits

Experience unparalleled efficiency and cost savings with AI infrastructure management features that simplify operations while enhancing performance across all environments.




Find answers to your most pressing questions about self-service compute consumption.

What is AI Infrastructure?

AI Infrastructure refers to the underlying systems and technologies that support AI applications. This includes hardware, software, and network resources that enable data processing and machine learning. A robust AI Infrastructure is essential for efficient and scalable AI deployments.

What are the benefits of AI Infrastructure?

The benefits of a well-structured AI Infrastructure include improved efficiency, scalability, and cost-effectiveness. It enables organizations to leverage AI capabilities without significant upfront investments. It also supports faster decision-making and innovation.

Is AI Infrastructure scalable?

Yes, AI Infrastructure is designed to be scalable. Organizations can expand their resources to accommodate growing data and processing needs. This flexibility lets businesses adapt to changing demands without disruption.

How do I get started with AI Infrastructure?

To get started with AI Infrastructure, first assess your organization's needs and goals. Next, choose the tools and technologies that align with your objectives. Finally, implement a strategy that includes training and support for your team.
