Get Started with BioContainers Using Rafay

In this step-by-step guide, a bioinformatics data scientist uses Rafay’s end-user portal to launch a well-resourced remote VM and run a series of BioContainers with Docker.


The Rafay Platform transforms GPU infrastructure into a secure, multi-tenant, revenue-ready cloud. Cloud providers, neoclouds, and sovereign AI clouds that partner with Rafay are delivering CSP-grade use cases to their user communities. Learn how Rafay helps power the most innovative GPU providers in the world.

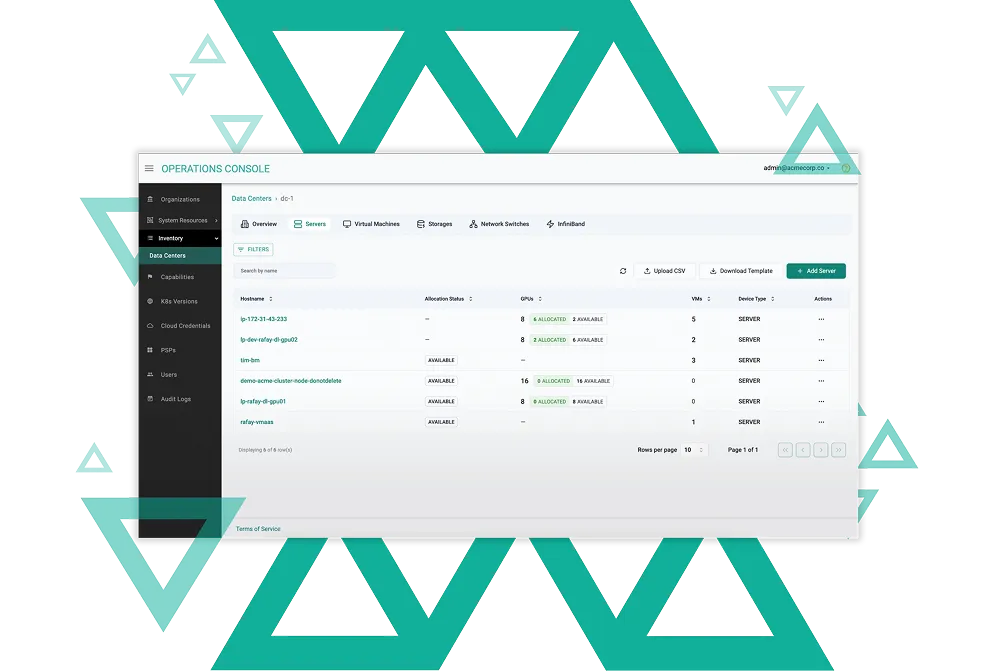

Onboard GPU and CPU resources from data centers, public clouds, or colocation into a single control plane. Standardize and unify infrastructure for easier governance.

Create standardized compute and application packages such as training, inference, or RAG workloads, complete with networking, storage, and policy enforcement.

Maximize GPU utilization with dedicated, shared, or fractional GPU allocation. Rafay ensures the right workload lands on the right compute at the right time.

Expose services through APIs or branded portals. Enable developers and data scientists to instantly access GPU-backed environments while maintaining governance and control.

The Rafay Platform provides the orchestration and workflow automation that GPU clouds need to turn static compute into enterprise-grade, centrally governed, self-service environments, so that costly hardware generates business value and higher revenue.

Give developers and data scientists cloud-like access to GPU resources via catalogs, with no IT tickets required.

Package and deliver inference APIs, LLMs, and vertical AI apps using NVIDIA NIM, Run:AI, or custom frameworks.

Enable secure isolation, fine-grained access controls, quota enforcement, and chargeback across customers, teams, and workloads.

The Rafay Platform is designed to address the most complex requirements from the most demanding cloud customers. Rafay's customers have multiple deployment options available to them including:

Find answers to common questions about our GPU Cloud Orchestration services below.

GPU orchestration refers to the automated management of GPU resources in cloud environments. It allows for efficient allocation, scaling, and monitoring of GPU workloads. This ensures optimal performance and cost-effectiveness for enterprises.

Our orchestration platform integrates seamlessly with your existing infrastructure. It leverages intelligent algorithms to allocate GPU resources based on demand. This dynamic approach enhances operational efficiency and reduces idle resources.

The primary benefits include improved resource utilization, reduced operational costs, and enhanced scalability. Additionally, it simplifies management tasks, allowing teams to focus on innovation. Overall, it accelerates project timelines and boosts productivity.

Yes, our GPU orchestration platform is designed with security in mind. We implement robust security protocols to protect your data and resources. Regular audits and compliance checks ensure that your operations remain secure.

Getting started is easy! Simply sign up for a demo or contact our sales team. We'll guide you through the setup process and help you optimize your GPU resources.